This post was written by Marcus Munafò (University of Bristol) and Malcolm Macleod (University of Edinburgh), who are on the steering group of the UK Reproducibility Network, and Malcolm Skingle, director of academic liaison at GlaxoSmithKline.

Improved upstream quality control can make research more effective, say Marcus Munafò and his colleagues.

Science relies on its ability to self-correct. But the speed and extent to which this happens is an empirical question. Can we do better?

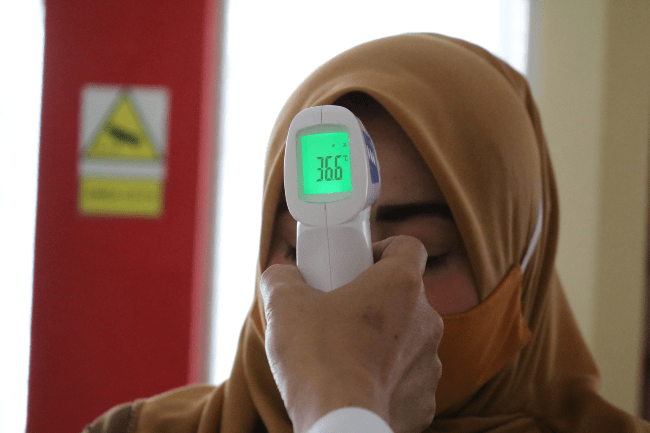

The Covid pandemic has highlighted existing fault lines. We have seen the best of scientific research in the incredible speed at which vaccines have been developed and trialled. But we have also seen a deluge of Covid-related studies conducted in haste, and that haste is often reflected in their less-than-ideal quality.

Peer review, the traditional way of assessing academic research, occurs only after the work has been done. Can we identify indicators of research quality earlier in the process, when there is more opportunity to fix things? And if we did, could scientific knowledge be translated into societal benefit more rapidly and efficiently?

In many ways, the cultures and working practices of academia are still rooted in a 19th-century model of the independent scientist. Many research groups are effectively small, artisanal businesses using unique skills and processes.

This approach can yield exquisitely crafted output. But it also risks poor reproducibility and replicability, through, for instance, closed workflows, closed data and the use of proprietary file formats. Incentive structures built around assessing and rewarding individuals reinforce this, despite the welcome shift towards team-based research activity, management, dissemination and evaluation.

Lessons from industry

Research needs a more coherent approach to ensuring quality. One of us has previously argued that one way to achieve this would be to take the concept of quality control used in manufacturing and apply it to scientific research.

The pharmaceutical industry is one R&D-intensive sector that has worked hard to improve quality control and ensure data integrity. Regulatory frameworks and quality-assurance processes are designed to make the results generated in the early stages of drug development more robust.

Indeed, some of the early concerns about the robustness of much academic research—described by some as the ‘reproducibility crisis’—emerged from pharmaceutical companies.

For regulated work, major pharmaceutical companies must be able to demonstrate the provenance of their data in fine detail. Standard operating procedures for routine work, and extended descriptions of less common methods and experiments, make comparisons between labs easier and improve traceability.

Data constitute the central element of robust research. The integrity of the systems through which data are collected, curated, analysed and presented is at the heart of research quality. National measurement institutes, including the UK’s National Physical Laboratory and the National Institute for Biological Standards and Control, have a role to play in sharing best practice and developing protocols that contribute to international standards.

How well these systems perform depends on many factors: training in data collection and management; transparency to allow scrutiny and error detection; documentation, so that work can be replicated; and standard operating procedures to ensure a consistent approach.

Red-tape review

Academic researchers are increasingly keen to learn from industry, and vice versa, to identify best practice and ways to implement higher standards of data integrity. University and industrial research are very different, but academia can learn lessons and adopt working practices that could improve the quality of academic research in the biomedical and life sciences.

Learning from other sectors and organisations is a central theme of the UK Reproducibility Network. The network, established in 2019 as a peer-led consortium, aims to develop training and shape incentives through linked grassroots and institutional activity, and through coordinated efforts across universities, funders, publishers and other organisations. This multilevel approach reduces development costs and increases interoperability, for example when researchers move between groups and institutions.

Given the likely future pressures on the UK’s R&D budget, effective and efficient ways to bolster research quality will be essential to maximising the societal return on investment. Simply encouraging, or even mandating, new ways of working is not sufficient—many funders and journals have data-sharing policies, for example, but adherence is uneven and often unenforced.

A coordinated approach will require a clear model of research quality; buy-in from institutions, funders and journals; infrastructure; training; the right incentives; and ongoing evaluation. Coordinating all these elements will be challenging, but it is essential to improving research quality and efficiency. We need to take a whole-system approach.

This also applies to the independent review of research bureaucracy recently announced by the UK government, which is charged with identifying how to free researchers from administrative burdens. Its aim is laudable, and academia should certainly not be regulated in the same way as the pharmaceutical industry, but the review should recognise that an ounce of prevention can save a pound of cure.

Developing and deploying systems that improve research quality might increase efficiency and reduce research waste, as well as secure greater value from our national research effort.

This blog post was originally published in Research Fortnight, where the original article can be read.